In September 2025, Google Research published findings on what they call a "Personal Health Agent," a multi-specialist AI system designed to analyze wearable data, interpret blood biomarkers, and provide evidence-based health coaching. The research represents one of the most comprehensive evaluations of AI health agents to date, involving nearly 1,200 users and over 1,100 hours of expert validation.

The timing is notable. Google's work remains a research prototype with no product timeline, yet companies like Syd Life AI are already deploying similar AI-powered health platforms to thousands of users, and others like Ultrahuman are building comprehensive hardware-software ecosystems that generate the multimodal data these AI agents need to function. The gap between laboratory validation and real-world deployment raises important questions about what we're actually buying when we invest in AI health guidance, and about what risks we may not yet fully understand.

The Multi-Agent Architecture: Learning from Human Teams

Google's core insight challenges conventional AI development: instead of building one powerful model to handle all health queries, they created three specialist agents working in concert.

The Data Science Agent functions as a personal analyst, interpreting ambiguous questions like "Am I getting more fit?" and translating them into statistically valid analysis plans. In benchmarks, it outperformed baseline AI models by 41% in generating quality analyses, a meaningful improvement when decisions affect your health protocols.

The Domain Expert Agent serves as a medical knowledge base, grounded in authoritative sources like the NCBI research database. Rather than simply retrieving information, it personalizes recommendations based on individual health profiles and existing conditions.

The Health Coach Agent bridges knowing and doing, employing psychological frameworks like motivational interviewing to support behavior change through natural conversation.

When these three specialists collaborate under an intelligent orchestrator that dynamically assigns roles based on query complexity, they consistently outperform both single AI systems and even parallel multi-agent setups without coordination. The validation involved over 7,000 expert annotations across 10 benchmark tasks. This level of rigor has rarely been seen in consumer health tech.
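To make the orchestration idea concrete, here is a minimal Python sketch of a router that decides which specialists a query should reach. It is not Google's implementation: the agent names, the keyword lists, and the `route` and `orchestrate` functions are all hypothetical, and a real orchestrator would presumably use a language model rather than keyword matching to judge query complexity.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    name: str
    keywords: tuple[str, ...]          # hypothetical trigger words for this specialist
    handle: Callable[[str], str]       # stand-in for a real model call


def make_agent(name: str, keywords: tuple[str, ...]) -> Agent:
    # The handler only echoes the query; a real agent would call a model here.
    return Agent(name, keywords, lambda query: f"[{name}] response to: {query}")


# Illustrative specialists mirroring the three roles described above.
AGENTS = [
    make_agent("data_science", ("trend", "average", "fit", "compare")),
    make_agent("domain_expert", ("cholesterol", "biomarker", "medication", "risk")),
    make_agent("health_coach", ("goal", "motivat", "habit", "plan")),
]


def route(query: str) -> list[Agent]:
    """Pick every specialist whose keywords appear in the query."""
    text = query.lower()
    selected = [agent for agent in AGENTS if any(k in text for k in agent.keywords)]
    # Assumption: fall back to the coach when nothing matches.
    return selected or [AGENTS[2]]


def orchestrate(query: str) -> list[str]:
    """Send the query to each selected specialist and collect the replies."""
    return [agent.handle(query) for agent in route(query)]
```

A simple question such as "Am I getting more fit?" reaches only the data science agent in this toy version, while a question that touches a biomarker, a trend, and a habit reaches all three. The difference between this and an uncoordinated parallel setup is that the router decides who speaks, instead of every agent answering every query.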

The Ecosystem Approach: Hardware Meets Intelligence

While Google focuses on the AI architecture for processing health data, companies like Ultrahuman are tackling an equally critical challenge: generating high-quality, multimodal data for these systems to analyze.

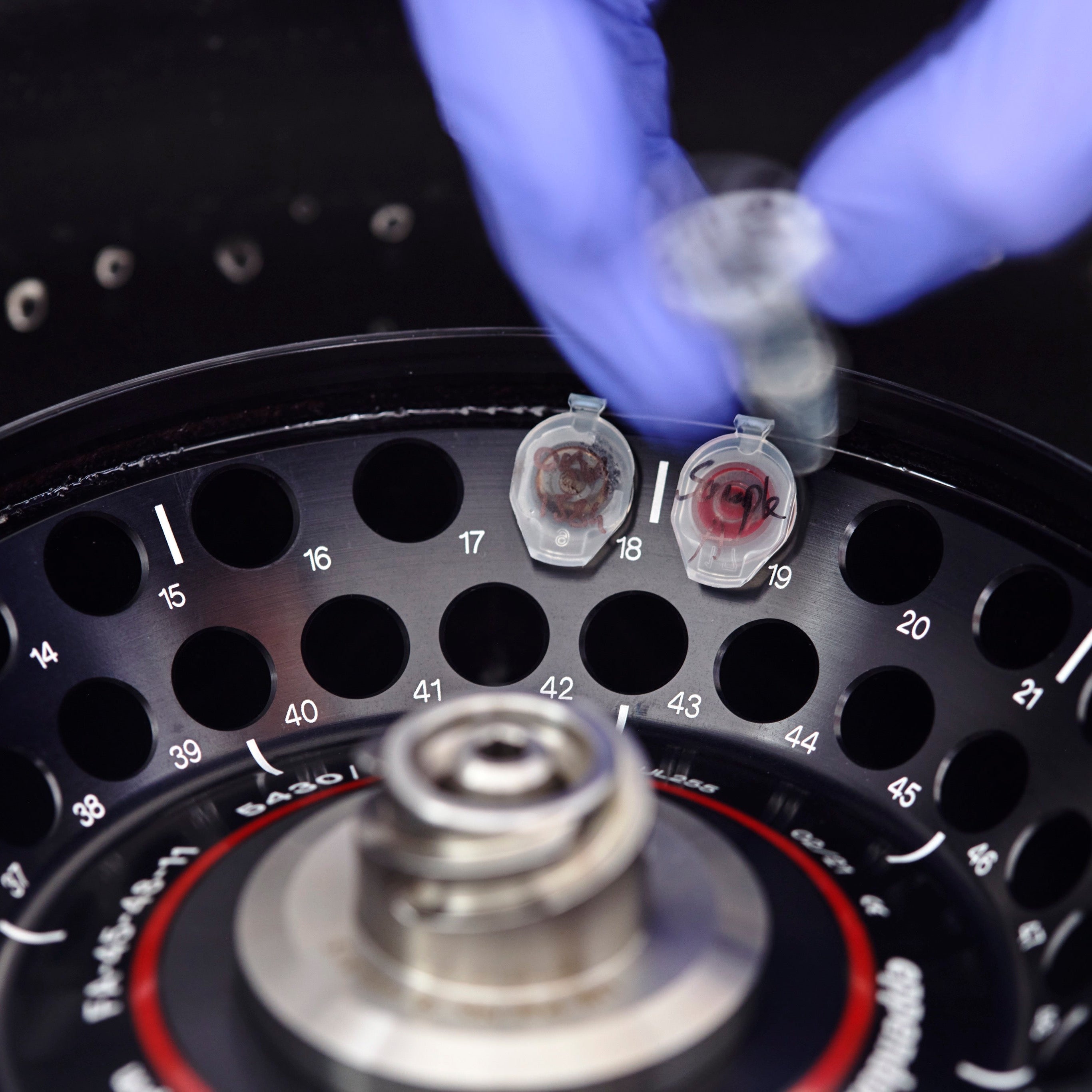

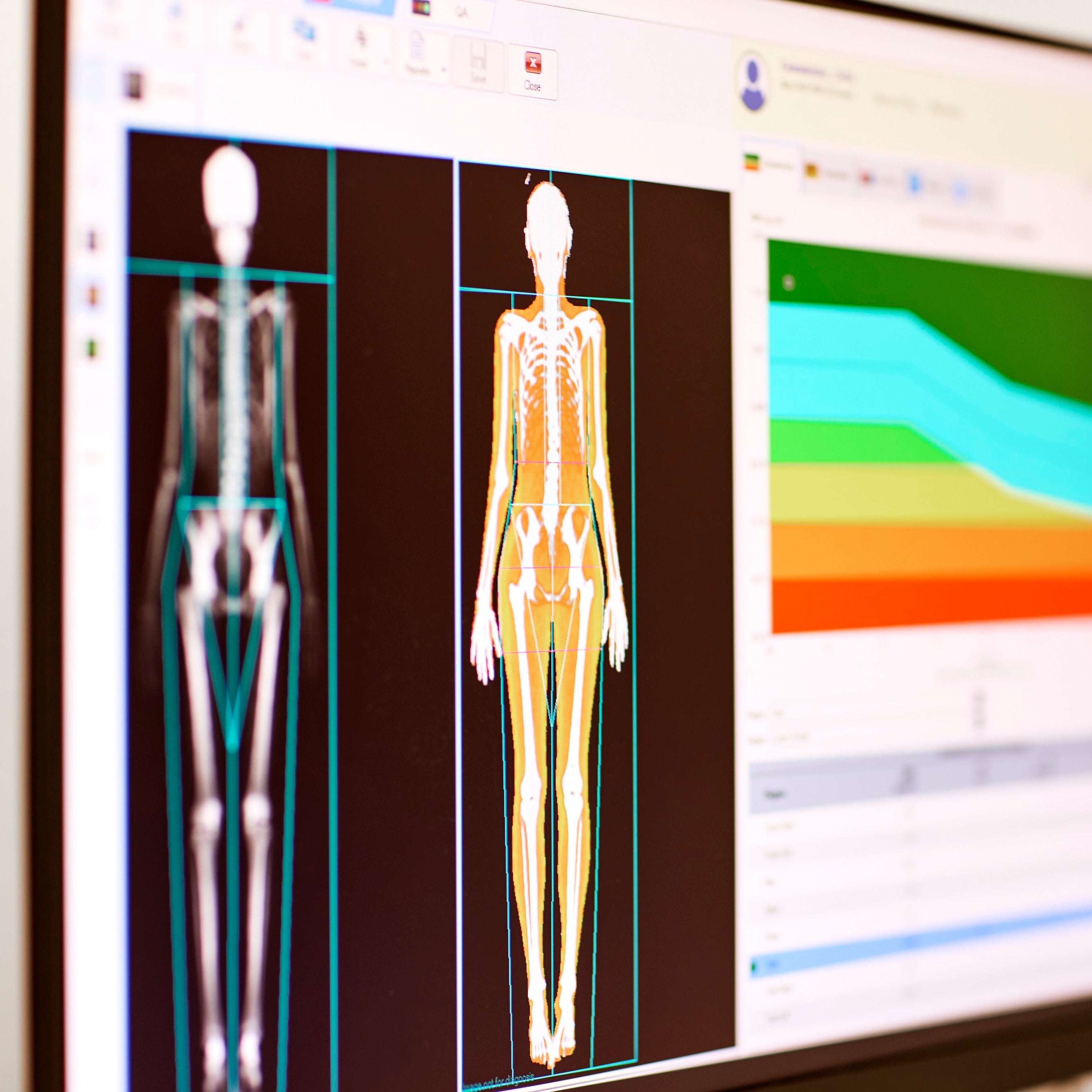

Ultrahuman's ecosystem illustrates the hardware foundation AI health agents require. Their Ring Air tracks sleep, movement, heart rate variability, and skin temperature continuously. The M1 platform adds continuous glucose monitoring through a semi-invasive CGM sensor. Ultrahuman Home measures environmental factors (air quality, temperature, humidity) that impact health but exist outside the body. Their blood testing service (Ultrahuman Blood Vision) adds 100+ biomarkers to the data stream.

This multi-device approach addresses a fundamental limitation of AI health guidance: you can only analyze what you measure. A conversational AI might ask sophisticated questions about your sleep quality, but without objective data on sleep stages, HRV during sleep, and environmental conditions in your bedroom, its recommendations remain partially informed guesses.

Ultrahuman's strategy (integrating multiple hardware devices into a unified platform) creates the data substrate that AI agents like Google's prototype need to function optimally. The correlation between glucose response (M1) and recovery metrics (Ring Air) or environmental factors (Home) enables insights impossible from any single data source.

But this ecosystem approach introduces new complexities. More devices mean more data collection points, more potential privacy vulnerabilities, and more opportunities for measurement error to compound across the system. When an AI agent synthesizes inputs from a smart ring, CGM, environmental monitor, and blood tests, how does it weight conflicting signals? What happens when one device malfunctions but continues feeding data into the analysis pipeline?
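One common way to approach both questions is sketched below in Python: weight each device by how noisy it is (inverse-variance weighting), and exclude any stream that has stopped varying before fusing. This is an illustration only, not how Ultrahuman or Google handle it. The function names, the variance figures, and the flatline rule are assumptions, and a truly steady signal could be flagged by mistake.

```python
from statistics import pstdev


def is_flatlined(readings: list[float], min_spread: float = 1e-6) -> bool:
    """Flag a stream whose recent readings barely vary (a possible stuck sensor)."""
    return len(readings) >= 3 and pstdev(readings) < min_spread


def fuse(estimates: list[tuple[float, float]]) -> float:
    """Inverse-variance weighted mean of (value, variance) pairs.

    Devices with lower variance (less noise) get proportionally more weight.
    """
    weights = [1.0 / variance for _, variance in estimates]
    weighted_sum = sum(w * value for w, (value, _) in zip(weights, estimates))
    return weighted_sum / sum(weights)


def fuse_devices(streams: dict[str, tuple[list[float], float]]) -> tuple[float, list[str]]:
    """Fuse the latest reading from each healthy device.

    `streams` maps a device name to (recent readings, assumed noise variance).
    Returns the fused estimate and the names of devices that were excluded.
    """
    usable, dropped = [], []
    for name, (readings, variance) in streams.items():
        if is_flatlined(readings):
            dropped.append(name)
        else:
            usable.append((readings[-1], variance))
    if not usable:
        raise ValueError("no healthy device streams to fuse")
    return fuse(usable), dropped
```

The important design choice is that the excluded devices are returned rather than silently ignored, so a downstream agent can tell the user that one input was left out of the analysis.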

From Research to Reality: The Syd.life Case Study

Syd.life represents a different approach: deploying AI health agents today, without waiting for comprehensive hardware ecosystems or years of validation. The platform leverages over 1 million research papers to provide personalized preventive health recommendations, positioning itself as an "AI Life Quality Mentor" that addresses nine life dimensions from cognitive performance to financial health.

User reviews on Trustpilot show a 4.6-star rating, with customers praising the platform's research-backed recommendations and AI conversational interface. One user described how genetic testing combined with Syd's nutritional guidance eliminated their mid-morning cravings and unexpectedly improved their sleep. Another, diagnosed with cancer, credited the platform with helping them proactively manage their condition while becoming "fitter, healthier and happier."

The enthusiastic testimonials reflect what many health optimization enthusiasts crave: personalized, science-backed guidance that cuts through wellness noise. But they also reveal the first major caveat of AI health agents: the gap between correlation and causation in user outcomes.

Did Syd's recommendations cause the improved sleep, or did the user's heightened attention to health behaviors (catalyzed by any structured intervention) drive the change? Without controlled trials, we're observing associations, not proven efficacy. This matters enormously when deciding whether to follow AI-generated health advice over established medical guidance.

The Trust Paradox: Grounding vs. Hallucination

Google's research emphasizes that their Domain Expert Agent accesses authoritative sources to "ground its responses in verifiable facts." This architectural choice addresses one of the most dangerous failure modes in AI health guidance: confident-sounding but incorrect medical information.

Yet grounding alone doesn't eliminate the problem entirely. Even with access to quality sources, AI systems can:

- Misinterpret context: A study showing benefits of fasting in metabolically healthy adults might be inappropriately applied to someone with blood sugar regulation issues.

- Miss contraindications: Recommendations that seem evidence-based in isolation may be dangerous when combined with medications or conditions not fully captured in the AI's context window.

- Overfit to correlations: Large language models can identify patterns in training data that don't reflect genuine causal relationships, generating advice that sounds scientifically grounded but isn't.

Google's research acknowledges these limitations implicitly by noting this is "a conceptual framework for research purposes, and should not be considered a description of any specific product." Translation: extensive additional validation would be required before clinical deployment.

Commercial platforms operating today don't have the luxury of that disclaimer. When Syd Life AI tells users it draws from "1.2 million scientific research papers," consumers may reasonably assume the same level of validation Google applied (over 1,100 hours of expert review). Whether that's actually occurred isn't disclosed.

The Personalization Problem: Data Limitations

Both Google's research prototype and platforms like Syd.life promise personalization, but they face a fundamental constraint: they only know what they can measure.

Google's system was validated using Fitbit data, questionnaires, and blood tests. Comprehensive, but far from complete. Even Ultrahuman's more extensive ecosystem, combining wearables, CGM, environmental sensors, and blood biomarkers, captures only a fraction of the biological complexity driving health outcomes. Missing are:

- Genetic variants affecting drug metabolism or nutrient absorption (unless separately tested)

- Microbiome composition influencing everything from mood to immune function

- Detailed environmental exposures (workplace stress, relationships, air quality beyond the home)

- Social determinants of health that often outweigh individual behavior

Ultrahuman's approach of layering multiple measurement modalities helps: the intersection of glucose response and sleep quality reveals insights neither metric provides alone. But even comprehensive measurement differs from complete understanding. The danger is that both users and AI systems mistake "data-driven" for "comprehensive," making confident recommendations based on incomplete information.

Google's research tested personalization by having clinicians and end-users evaluate whether responses appropriately considered individual context. The Domain Expert Agent scored significantly higher than baseline models. But "significantly better than a generic AI" is not the same as "as good as a human specialist reviewing your complete medical history."

The Behavior Change Bottleneck

Perhaps the most compelling finding from Google's research: users prioritize actionable guidance above all else. The Health Coach Agent, designed around motivational interviewing principles, consistently outperformed baseline models in driving goal-setting and sustained behavior change.

This aligns with decades of behavioral science research showing that information alone rarely changes behavior. What matters is implementation support: the "how," not just the "what."

Here, AI health agents show genuine promise. Unlike human coaches limited by time and availability, AI can provide 24/7 support, indefinite patience, and immediate responses to motivation lapses. Syd users frequently mention accountability as a key benefit, with one noting they "feel comfort in knowing that whenever I need, I can turn to syd."

Ultrahuman's approach complements this with real-time nudges. When your movement index drops or glucose spikes after a meal, the system prompts immediate action. This closed-loop feedback (measure, analyze, nudge, measure again) theoretically accelerates behavior change by making consequences visible and immediate.
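The measure, analyze, nudge cycle can be sketched in a few lines of Python. Everything here is hypothetical: the `Nudge` type, the `analyze` function, the mg/dL unit, and especially the 30-point rise threshold, which is a placeholder for illustration and not a clinical value or anything Ultrahuman has published.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Nudge:
    index: int      # position of the reading that triggered the nudge
    rise: float     # how far that reading sits above baseline
    message: str


def analyze(readings: list[float], baseline: float,
            rise_threshold: float = 30.0) -> Optional[Nudge]:
    """Return a nudge for the first reading that rises past the threshold.

    Returns None when no reading in the window exceeds baseline + threshold.
    """
    for index, value in enumerate(readings):
        rise = value - baseline
        if rise >= rise_threshold:
            message = f"Glucose rose {rise:.0f} mg/dL above baseline"
            return Nudge(index, rise, message)
    return None


def run_loop(windows: list[list[float]], baseline: float) -> list[Nudge]:
    """Measure (each window), analyze, nudge, then measure again with the next window."""
    nudges = []
    for window in windows:
        nudge = analyze(window, baseline)
        if nudge is not None:
            nudges.append(nudge)
    return nudges
```

Even in this toy form, the loop only reports that a reading crossed a line. Whether the prompt that follows actually changes behavior is a separate question that the code cannot answer.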

But there's a caveat: AI coaching may be optimized for engagement rather than for outcomes. An AI trained to keep users interacting with the platform may learn to provide emotionally validating responses that feel supportive but don't challenge ineffective behaviors. It might recommend small, achievable changes because they build confidence. Or it might simply be avoiding difficult interventions that users would resist. From the outside, the two look the same.

Google's research evaluated adherence to coaching principles, as rated by experts, and user satisfaction, but didn't track long-term behavior change or health outcomes. That longitudinal data would be essential for understanding whether AI coaching actually works beyond the initial enthusiasm phase.

The Regulatory Vacuum

Here's where the gap between research and reality becomes most concerning: AI health agents currently operate in a regulatory gray zone.

If an AI system diagnoses disease or recommends treatment, it's likely a medical device requiring FDA clearance. But if it offers "wellness guidance" or "lifestyle recommendations," it may fall outside regulatory oversight entirely, even if users treat those recommendations as medical advice.

Google's research explicitly disclaims any current product, noting that "any real-world application would be subject to a separate design, validation, and review process." That's appropriate caution.

Commercial platforms like Syd.life and Ultrahuman market themselves as preventive health and performance optimization tools, carefully avoiding diagnostic or treatment claims. They describe helping users "optimize metabolic fitness" or "improve life quality": wellness framing that sidesteps medical device regulation while still influencing consequential health decisions.

The risk: users making important health choices based on AI recommendations that haven't undergone the validation required for actual medical devices. Someone delaying necessary medical care because an AI wellness platform suggests lifestyle interventions may work. Someone experiencing adverse effects from supplements recommended without full consideration of their medication regimen. Someone over-exercising based on recovery metrics they misunderstand, causing injury rather than adaptation.

These aren't hypothetical concerns. They're predictable consequences of powerful AI systems providing health guidance without the safeguards required of human practitioners.

The Data Privacy Dimension

AI health agents require intimate data to personalize recommendations: sleep patterns, activity levels, dietary habits, stress indicators, genetic information, blood biomarkers. Ultrahuman's ecosystem adds glucose responses to every meal, environmental conditions in your home, and continuous biometric streaming from wearables.

Google's research used an IRB-reviewed dataset where users provided informed consent. Commercial platforms have privacy policies governing data use. But consider the incentives: health data is extraordinarily valuable for insurance pricing, pharmaceutical marketing, employment decisions, and consumer targeting.

AI companies will face persistent pressure to monetize that data in ways users may not fully anticipate when signing up for a wellness app. The question isn't whether companies will handle data responsibly. Many genuinely intend to. The question is what happens when business models shift, companies get acquired, or financial pressure mounts.

The multi-agent architecture Google proposes, with specialized agents collaborating on user queries, creates additional privacy considerations. Each agent potentially processes and stores user data. Each integration point between agents represents a potential vulnerability. Each update to the orchestration logic could change how data flows through the system.

Ultrahuman's ecosystem approach magnifies this challenge. Data flows from Ring Air to app, from M1 sensor to cloud, from Home monitor to servers, from blood tests to profile. Each device, each transmission, each storage location introduces risk. A comprehensive health ecosystem means comprehensive data exposure.

For executives and entrepreneurs optimizing their health, the question isn't just "Will this AI improve my biomarkers?" It's also "Who else gets access to the data showing my stress patterns, sleep quality, glucose responses, and genetic predispositions?"

Strategic Implications: Questions to Ask

The Google research, Syd's AI-first deployment, and Ultrahuman's ecosystem approach represent three distinct strategies in a rapidly evolving space. More AI health agents will launch in the coming months, each claiming to personalize wellness based on your unique data profile.

For leaders evaluating these tools, here are the critical questions the current state of technology demands:

On validation:

- What level of expert review has the system undergone? Google used 1,100+ hours across multiple specialties. What's your platform's equivalent?

- Are health outcome claims based on controlled studies, or user testimonials and correlational data?

- How does the system handle edge cases, contradictory evidence, or situations outside its training distribution?

On architecture:

- Is this a single model trying to do everything, or specialized agents collaborating? The research suggests architecture matters enormously.

- What authoritative sources does the domain knowledge agent access? How current is that information?

- Can you audit the reasoning behind specific recommendations, or is it a black box?

On data collection:

- What data does the system actually have about you? Is it relying on self-reported information, single-source wearables, or multimodal measurements?

- What data does it not have? What decisions might those limitations affect?

- If using multiple devices (like Ultrahuman's approach), how are measurements validated and conflicting signals resolved?

On limitations:

- How does the system handle uncertainty? Does it communicate confidence levels, or present all recommendations with equal authority?

- What's the escalation path when AI guidance is insufficient? Does it recognize when you need human expertise?

- Can you distinguish between well-validated recommendations and speculative suggestions?

On privacy and incentives:

- Who owns your health data? What uses are permitted beyond direct service provision?

- How is the company monetized? If it's not selling subscriptions or hardware, it's likely selling something else.

- What happens to your data if the company is acquired or fails?

- For ecosystem approaches with multiple devices, where does data reside and who has access across the platform?

The Path Forward: Collaboration, Not Replacement

The most important insight from Google's research may be the most obvious: specialized expertise collaborating effectively outperforms general capability applied broadly. That's true for human health teams. It appears to be true for AI systems. It's true for hardware ecosystems like Ultrahuman's. And it's almost certainly true for human-AI collaboration.

The optimal use case for AI health agents probably isn't replacing your doctor, nutritionist, or health coach. It's augmenting them with:

- 24/7 availability for routine questions and motivation support

- Statistical analysis of your personal longitudinal data

- Rapid synthesis of relevant research when facing specific health decisions

- Consistent implementation support for established protocols

- Real-time feedback loops between behavior and biomarkers

Platforms like Syd.life that position AI as a "mentor" rather than a replacement may be closer to the sustainable model. Ultrahuman's ecosystem approach (providing comprehensive measurement infrastructure) addresses the "garbage in, garbage out" problem that limits AI health guidance. Google's research validates the multi-agent architecture that could make AI recommendations more trustworthy.

Each represents a piece of the puzzle. But no one has assembled the complete picture: validated AI agents + comprehensive multimodal measurement + appropriate regulatory oversight + sustainable business models + genuine long-term outcome data.

The Bottom Line

AI health agents represent a genuine breakthrough in personalized health technology. The multi-agent architecture Google validated offers a blueprint for systems that could genuinely augment human health expertise. Hardware ecosystems like Ultrahuman's create the data foundation these AI systems need to function. Early commercial deployments like Syd.life are showing that users want this technology and, in many cases, report benefit from it.

But we're in the early innings. The technology is advancing faster than our ability to validate it, regulate it, or fully understand its limitations. The business models are still forming. The long-term health outcomes are unknown. The privacy implications are evolving. The line between wellness optimization and medical advice remains legally ambiguous.

For executives and entrepreneurs investing in health optimization, the opportunity is real. But so are the risks. Treat AI health agents as powerful tools requiring sophisticated evaluation, not magical solutions requiring only enthusiasm. Demand transparency about validation, limitations, and incentives. Understand what data you're sharing and how comprehensive measurement actually is. Maintain relationships with human health professionals who can provide the judgment AI systems still lack.

The future of preventive health will almost certainly involve AI agents working with comprehensive data ecosystems. But the path from here to there requires navigating both the transformative potential and the legitimate concerns about premature deployment of systems making consequential recommendations based on incomplete data and insufficient validation.

The technology is impressive. The hardware ecosystems are maturing. The user enthusiasm is genuine. The caution is warranted. All of these statements can be true simultaneously.